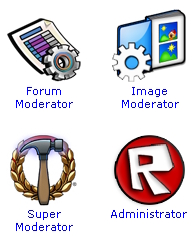

Moderation

Moderation is the general term for anything relating to reports, image moderation, or any other content that passes through the Moderators. Roblox has a very large moderation system: most of the content uploaded by users is checked by a human to confirm that it is acceptable for Roblox.

Comments, In-Game Chat and General Text

Roblox uses several systems to handle the massive amount of text that is sent on our site every day. To make sure that everyone is following the rules and that inappropriate content is not allowed on our site, we monitor Personal Messages, Forum posts and Comments passively. This means that if a bad word or bad phrase appears, the text is removed and immediately shown to a Moderator.
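The passive monitoring described above can be pictured with a minimal sketch. It is only an illustration and not Roblox's actual filter: `BLOCKED_TERMS`, `ModerationQueue`, `find_blocked_terms` and `post_message` are hypothetical names, and the term list is a placeholder.

```python
import re
from dataclasses import dataclass, field

# Hypothetical placeholder terms; the real filter list is not documented here.
BLOCKED_TERMS = {"badword", "bad phrase"}


@dataclass
class ModerationQueue:
    """Holds removed text until a Moderator looks at it (assumed structure)."""
    pending: list = field(default_factory=list)

    def flag(self, author, text, matches):
        self.pending.append({"author": author, "text": text, "matches": matches})


def find_blocked_terms(text):
    """Return the blocked terms that appear as whole words or phrases in text."""
    lowered = text.lower()
    return sorted(
        term for term in BLOCKED_TERMS
        if re.search(r"\b" + re.escape(term) + r"\b", lowered)
    )


def post_message(author, text, queue):
    """Return True if the text stays visible, False if it was removed and flagged."""
    matches = find_blocked_terms(text)
    if matches:
        # The text is removed and shown to a Moderator in the same step.
        queue.flag(author, text, matches)
        return False
    return True
```

In this sketch a clean message is simply posted, while a message containing a listed term is withheld and added to the queue for a Moderator to review.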

Additionally, almost every piece of text that a user sees on the website has a Report Abuse button right next to it, and a larger one is available in-game. If a user ever encounters something that is against the rules, or something the user feels should not be allowed, he or she is welcome to use the Report button to bring it to a Moderator's attention.

Images

Every image, without exception, goes through a main Image Queuing System, where Moderators review it before it is allowed on the website. Until it has been moderated, an image is not shown on the site and cannot be accessed in-game. This prevents inappropriate content from entering the site.
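The rule that an image stays hidden until a Moderator has reviewed it can be sketched as a small state machine. This is an assumption-based illustration, not the real Image Queuing System: `ImageStatus`, `ImageQueue` and their methods are hypothetical names.

```python
from enum import Enum


class ImageStatus(Enum):
    PENDING = "pending"      # uploaded, waiting for a Moderator
    APPROVED = "approved"    # reviewed and allowed on the site
    REJECTED = "rejected"    # reviewed and not allowed


class ImageQueue:
    """Tracks the review status of each uploaded image (assumed structure)."""

    def __init__(self):
        self._status = {}

    def upload(self, image_id):
        # Every new image starts out pending, with no exceptions.
        self._status[image_id] = ImageStatus.PENDING

    def review(self, image_id, approved):
        # Only an image that is still waiting in the queue can be reviewed.
        if self._status.get(image_id) is not ImageStatus.PENDING:
            raise ValueError(f"image {image_id!r} is not awaiting review")
        self._status[image_id] = (
            ImageStatus.APPROVED if approved else ImageStatus.REJECTED
        )

    def is_visible(self, image_id):
        # Pending, rejected and unknown images are never shown.
        return self._status.get(image_id) is ImageStatus.APPROVED
```

The key design point is that visibility defaults to off: an image is shown only after an explicit approval, so nothing reaches the site or the game unreviewed.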

Report System

When a report is generated, either by our system watching for inappropriate words or by users in games and on the website, it is reviewed by an actual Moderator within 15 minutes, and appropriate action is taken if the account is actually doing something wrong. Any "False Reports", meaning reports on people who have not broken the rules, are ignored and closed; we take no action on those accounts.